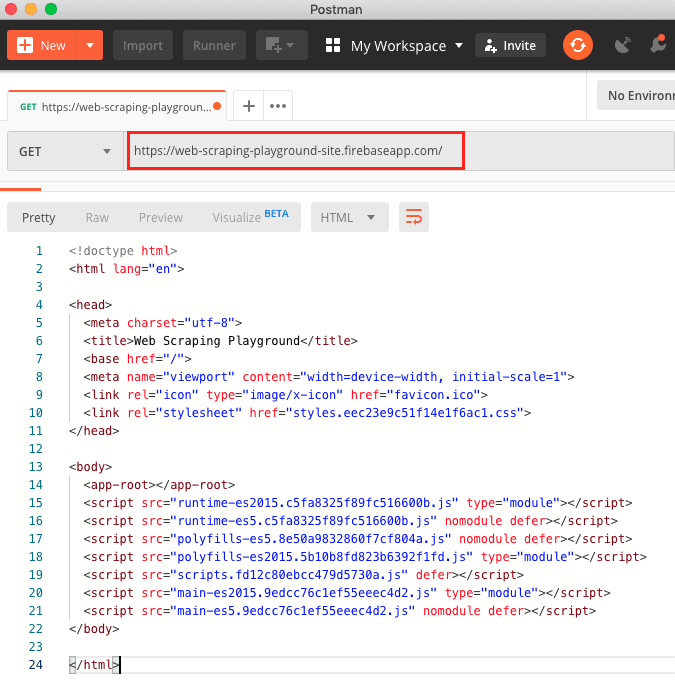

Web scraping is a complex task, and the complexity multiplies if the website is dynamic. According to the United Nations Global Audit of Web Accessibility, more than 70% of websites are dynamic in nature and rely on JavaScript for their functionality.

3. Once the installation completes, the Web Scraper icon appears in the top toolbar. Local installation: 1. Open Chrome, enter chrome://extensions/ in the address bar to open the extension management page, then drag the downloaded extension file Web-Scraperv0.3.7.crx onto this page and click "Add extension" to finish the installation.

Web scraper to get news article content. We'll build a simple web scraper that returns the content of a news article when given a specific URL. Examples of products that use similar technologies include price-tracking websites and SEO audit tools.

Web scraping relies on the HTML structure of the page, and thus cannot be completely stable. When the HTML structure changes, the scraper may break. Keep this in mind when reading this article. By the time you read this, the CSS selectors used here may be outdated.

Scraping allows transforming the massive amount of unstructured HTML on the web into structured data. A good scraper can retrieve the required data much quicker than a human can. In the previous article, we built a simple asynchronous web scraper. It accepts an array of URLs and makes asynchronous requests to them. When responses arrive, it parses data out of them. Asynchronous requests increase the speed of scraping: instead of waiting for the requests to be executed one by one, we run them all at once and, as a result, wait only for the slowest one.

It is very convenient to have a single HTTP client which can send as many concurrent HTTP requests as you want. But at the same time, a bad scraper which performs hundreds of concurrent requests per second can hurt the performance of the site being scraped. Since scrapers don’t bring any human traffic to the site and only affect its performance, some sites don’t like them and try to block their access. The easiest way to avoid being blocked is to crawl nicely by throttling the scraping speed (limiting the number of concurrent requests). The faster you scrape, the worse it is for everybody. The scraper should look like a human and perform requests accordingly.

A good solution for throttling requests is a simple queue. Let’s say that we are going to scrape 100 pages, but want to send only 10 requests at a time. To achieve this, we can put all these requests in the queue and then take the first 10 requests. Each time a request completes, we take a new one out of the queue.
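The mechanism can be sketched in plain PHP without any asynchronous code. The `ThrottleSketch` class below is a hypothetical illustration (it is not part of any library): it only tracks which jobs have been started and which are still waiting, under the assumption that something else calls `complete()` whenever a running job finishes.

```php
<?php

// Hypothetical helper for illustration only; not part of ReactPHP or clue/mq-react.
final class ThrottleSketch
{
    private $limit;
    private $pending = 0;     // jobs currently "in flight"
    private $waiting = [];    // jobs that have not been started yet
    public $started = [];     // ids of all jobs started so far

    public function __construct($limit)
    {
        $this->limit = $limit;
    }

    // Queue a job: it starts immediately if a slot is free, otherwise it waits.
    public function add($id)
    {
        if ($this->pending < $this->limit) {
            $this->start($id);
        } else {
            $this->waiting[] = $id;
        }
    }

    // Called when a running job finishes: free its slot and start the next waiting job.
    public function complete()
    {
        $this->pending--;
        if ($this->waiting) {
            $this->start(array_shift($this->waiting));
        }
    }

    public function pending()
    {
        return $this->pending;
    }

    private function start($id)
    {
        $this->pending++;
        $this->started[] = $id;
    }
}

$throttle = new ThrottleSketch(10);
foreach (range(1, 100) as $page) {
    $throttle->add($page);
}

echo count($throttle->started) . PHP_EOL; // 10: only the first ten pages are started

$throttle->complete();                    // one request finishes...
echo count($throttle->started) . PHP_EOL; // 11: ...so page 11 is taken out of the queue
```

A real queue does the same bookkeeping, but the "job" is a callback returning a promise, and the completion step is triggered when that promise settles.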

Queue Of Concurrent Requests

For a simple task like web scraping, powerful tools such as RabbitMQ, ZeroMQ or Kafka can be overkill. Actually, for our scraper, all we need is a simple in-memory queue. And the ReactPHP ecosystem already has a solution for it: clue/mq-react, a library written by Christian Lück. Let’s figure out how we can use it to throttle multiple HTTP requests.

First things first, we should install the library with Composer: `composer require clue/mq-react`.

Here is the problem we need to solve:

create a queue of HTTP requests and execute a certain amount of them at a time.

For making HTTP requests we use clue/buzz-react, an asynchronous HTTP client for ReactPHP. The snippet below executes two concurrent requests to IMDB:

Now, let’s perform the same task, but with a queue. First of all, we need to instantiate a queue (create an instance of Clue\React\Mq\Queue). It allows us to concurrently execute the same handler (a callback that returns a promise) with different (or the same) arguments:

In the snippet above we create a queue. This queue allows only two handlers to execute at a time. Each handler is a callback which accepts a $url argument and returns a promise via $browser->get($url). Then this $queue instance can be used to queue the requests:

In the snippet above the $queue instance is called as a function. Class Clue\React\Mq\Queue is invokable and accepts any number of arguments. All these arguments are passed to the handler wrapped by the queue. Consider calling $queue($url) as placing a $browser->get($url) call into a queue. From this moment the queue controls the number of concurrent requests. In our queue instantiation, we have declared $concurrency as 2, meaning only two concurrent requests at a time. While two requests are being executed, the others wait in the queue. Once one of the requests is complete (the promise from $browser->get($url) is resolved), a new request starts.
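The two steps described above (instantiating the queue, then invoking it) can be sketched as follows. This is a minimal sketch, assuming clue/mq-react and a version of clue/buzz-react whose Browser takes the event loop as its first constructor argument; the URLs and the output format are placeholders chosen for illustration.

```php
<?php

require __DIR__ . '/vendor/autoload.php';

use Clue\React\Buzz\Browser;
use Clue\React\Mq\Queue;
use Psr\Http\Message\ResponseInterface;
use React\EventLoop\Factory;

$loop = Factory::create();
$browser = new Browser($loop);

// Arguments: concurrency (2 handlers at a time), limit (null = unlimited queue length),
// and the handler, a callback that returns a promise.
$queue = new Queue(2, null, function ($url) use ($browser) {
    return $browser->get($url);
});

// Placeholder URLs; any list of pages works the same way.
$urls = [
    'http://www.imdb.com/title/tt1270797/',
    'http://www.imdb.com/title/tt2527336/',
    'http://www.imdb.com/title/tt4881806/',
];

foreach ($urls as $url) {
    // Invoking the queue schedules $browser->get($url); at most two run at once.
    $queue($url)->then(
        function (ResponseInterface $response) use ($url) {
            echo $url . ': ' . strlen((string) $response->getBody()) . ' bytes' . PHP_EOL;
        },
        function (Exception $e) use ($url) {
            echo $url . ' failed: ' . $e->getMessage() . PHP_EOL;
        }
    );
}

$loop->run();
```

With three URLs and a concurrency of 2, the third request starts only after one of the first two has settled.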

Scraper With Queue

Here is the source code of the scraper for IMDB movie pages built on top of clue/buzz-react and Symfony DomCrawler Component:

Class Scraper accepts an array of IMDB URLs via its method scrape($urls) and sends asynchronous requests to these pages. When responses arrive, method extractFromHtml($html) scrapes data out of them. The following code can be used to scrape data about two movies and then print this data to the screen:

If you want a more detailed explanation of building this scraper read the previous post “Fast Web Scraping With ReactPHP”.

From the previous section we have already learned that the process of queuing the requests consists of two steps:

- instantiate a queue providing a concurrency limit and a handler

- add asynchronous calls to the queue

To integrate a queue with the Scraper class, we need to update its method scrape(array $urls, $timeout = 5), which sends asynchronous requests. First, we need to accept a new argument for the concurrency limit and then instantiate a queue, providing this limit and a handler:

As a handler we use the $this->client->get($url) call, which makes an asynchronous request to the specified URL and returns a promise. Once the request is done and the response is received, the promise fulfills with this response.

The next step is to invoke the queue with the specified URLs. Now the $queue variable stands in for the $this->client->get($url) call, except that the call is taken from the queue. So, we can just replace this call with $queue($url):

And we are done. All the concurrency-limiting logic is hidden from us and is handled by the queue. Now, to scrape the pages with only 10 concurrent requests at a time, we should call the Scraper like this:

Method scrape() accepts an array of URLs to scrape, then a timeout for each request, and finally a concurrency limit.
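The updated class might look roughly like the sketch below. It is an approximation, not the article's original code: the constructor signature, the getMovieData() getter and the body of extractFromHtml() (which only reads the page title through Symfony DomCrawler, and needs symfony/css-selector for filter()) are assumptions made for illustration.

```php
<?php

require __DIR__ . '/vendor/autoload.php';

use Clue\React\Buzz\Browser;
use Clue\React\Mq\Queue;
use Psr\Http\Message\ResponseInterface;
use React\EventLoop\LoopInterface;
use Symfony\Component\DomCrawler\Crawler;

final class Scraper
{
    private $client;
    private $loop;
    private $scraped = [];

    public function __construct(Browser $client, LoopInterface $loop)
    {
        $this->client = $client;
        $this->loop = $loop;
    }

    public function scrape(array $urls = [], $timeout = 5, $concurrencyLimit = 10)
    {
        $this->scraped = [];

        // Step 1: a queue with a concurrency limit and a handler that returns a promise.
        $queue = new Queue($concurrencyLimit, null, function ($url) {
            return $this->client->get($url);
        });

        foreach ($urls as $url) {
            // Step 2: $queue($url) replaces the direct $this->client->get($url) call.
            // Rejections are ignored in this sketch.
            $promise = $queue($url)->then(function (ResponseInterface $response) {
                $this->scraped[] = $this->extractFromHtml((string) $response->getBody());
            });

            // Cancel the request if it has not completed within $timeout seconds.
            // Note: the timer starts when the request is queued, not when it is sent.
            $this->loop->addTimer($timeout, function () use ($promise) {
                $promise->cancel();
            });
        }
    }

    public function getMovieData()
    {
        return $this->scraped;
    }

    // Stand-in extraction: only the <title> is read, since real selectors go stale.
    private function extractFromHtml($html)
    {
        $node = (new Crawler($html))->filter('title');

        return ['title' => $node->count() ? trim($node->text()) : null];
    }
}
```

A consumer would then create the loop and the Browser, call `$scraper->scrape($urls, 40, 10)` for a 40-second timeout and at most 10 concurrent requests, run the loop, and read the results from `getMovieData()`.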

Conclusion

It is good practice to throttle concurrent requests: sending hundreds of them at once risks getting you blocked by the site. In this article I’ve given a quick overview of how you can use a lightweight in-memory queue in conjunction with an HTTP client to limit the number of concurrent requests.

You can find more detailed information about the clue/php-mq-react library in this post by Christian Lück.

You can find examples from this article on GitHub.

This article is a part of the ReactPHP Series.

Learning Event-Driven PHP With ReactPHP

The book about asynchronous PHP that you NEED!

A complete guide to writing asynchronous applications with ReactPHP. Discover event-driven architecture and non-blocking I/O with PHP!

Review by Pascal MARTIN

Minimum price: 5.99$

In the previous article, we created a scraper to parse movie data from IMDB. We also used a simple in-memory queue to avoid sending hundreds or thousands of concurrent requests and thus to avoid being blocked. But what if you are already blocked? The site that you are scraping has already added your IP to its blacklist and you don’t know whether it is a temporary block or a permanent one.

Such issues can be resolved with a proxy server. Using proxies and rotating IP addresses can prevent you from being detected as a scraper. The idea of rotating IP addresses while scraping is to make your scraper look like real users accessing the website from multiple different locations. If you implement it right, you drastically reduce the chances of being blocked.

In this article, I will show you how to send concurrent HTTP requests with ReactPHP using a proxy server. We will play around with some concurrent HTTP requests and then we will come back to the scraper, which we have written before. We will update the scraper to use a proxy server for performing requests.

How to send requests through a proxy in ReactPHP

For sending concurrent HTTP requests we will use the clue/reactphp-buzz package. To install it with Composer, run `composer require clue/buzz-react`.

Now, let’s write a simple asynchronous HTTP request:

We create an instance of Clue\React\Buzz\Browser, which is an asynchronous HTTP client. Then we request the Google web page via method get($url). Method get($url) returns a promise, which resolves with an instance of Psr\Http\Message\ResponseInterface. The snippet above requests http://google.com and then prints its HTML.

For a more detailed explanation of working with this asynchronous HTTP client check this post.

Class Browser is very flexible. You can specify different connection settings, like DNS resolution, TLS parameters, timeouts and of course proxies. All these settings are configured within an instance of React\Socket\Connector. Class Connector accepts a loop and then a configuration array. So, let’s create one and pass it to our client as a second argument.

This connector tells the client to use 8.8.8.8 for DNS resolution.
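A minimal sketch of such a client is shown below. It assumes versions of clue/buzz-react and react/socket in which both Browser and Connector take the event loop as their first constructor argument, as described above.

```php
<?php

require __DIR__ . '/vendor/autoload.php';

use Clue\React\Buzz\Browser;
use Psr\Http\Message\ResponseInterface;
use React\EventLoop\Factory;
use React\Socket\Connector;

$loop = Factory::create();

// Connector options: resolve host names through the DNS server at 8.8.8.8.
$connector = new Connector($loop, ['dns' => '8.8.8.8']);

// The connector is passed to the HTTP client as the second argument.
$browser = new Browser($loop, $connector);

$browser->get('http://google.com')->then(function (ResponseInterface $response) {
    // Print the HTML of the requested page.
    echo (string) $response->getBody();
});

$loop->run();
```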

Before we can start using a proxy, we need to install the clue/reactphp-socks package with Composer: `composer require clue/socks-react`.

This library provides SOCKS4, SOCKS4a and SOCKS5 proxy client/server implementation for ReactPHP. In our case, we need a client. This client will be used to connect to a proxy server. Then our main HTTP client will use this proxy client to send connections through a proxy server.

Notice that 127.0.0.1:1080 is just a dummy address. Of course, there is no proxy server running on our machine.

The constructor of the Clue\React\Socks\Client class accepts the address of the proxy server (127.0.0.1:1080) and an instance of the Connector. We have already covered Connector above. Create an empty connector here, with no configuration array.

The name Clue\React\Socks\Client may suggest that it is one more client in our code. But it is not the same thing as Clue\React\Buzz\Browser: it doesn’t send requests. Consider it a connection, not a client. Its main purpose is to establish a connection to a proxy server. Then the real client uses this connection to perform requests.

To use this proxy connection, we need to update the connector and specify the tcp option:

The full code now looks like this:
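Put together, the pieces described above could look like the following sketch. It assumes clue/socks-react and loop-first constructors for Browser and Connector; 127.0.0.1:1080 is the dummy address from the text, so the request is expected to fail unless a SOCKS proxy really listens there. The rejection callback is included so that such a failure is reported instead of passing silently.

```php
<?php

require __DIR__ . '/vendor/autoload.php';

use Clue\React\Buzz\Browser;
use Clue\React\Socks\Client as SocksClient;
use Psr\Http\Message\ResponseInterface;
use React\EventLoop\Factory;
use React\Socket\Connector;

$loop = Factory::create();

// The "proxy connection": proxy address plus an empty connector used to reach the proxy.
$proxy = new SocksClient('127.0.0.1:1080', new Connector($loop));

// A second connector for the HTTP client; its TCP connections go through the proxy.
$connector = new Connector($loop, ['tcp' => $proxy]);

$browser = new Browser($loop, $connector);

$browser->get('http://google.com')->then(
    function (ResponseInterface $response) {
        echo (string) $response->getBody();
    },
    function (Exception $e) {
        // Reached when the proxy is dead or unreachable.
        echo 'Request failed: ' . $e->getMessage() . PHP_EOL;
    }
);

$loop->run();
```

Swapping the dummy address for a working proxy is the only change needed to send the request through a real server.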

Now, the problem is: where to get a real proxy?

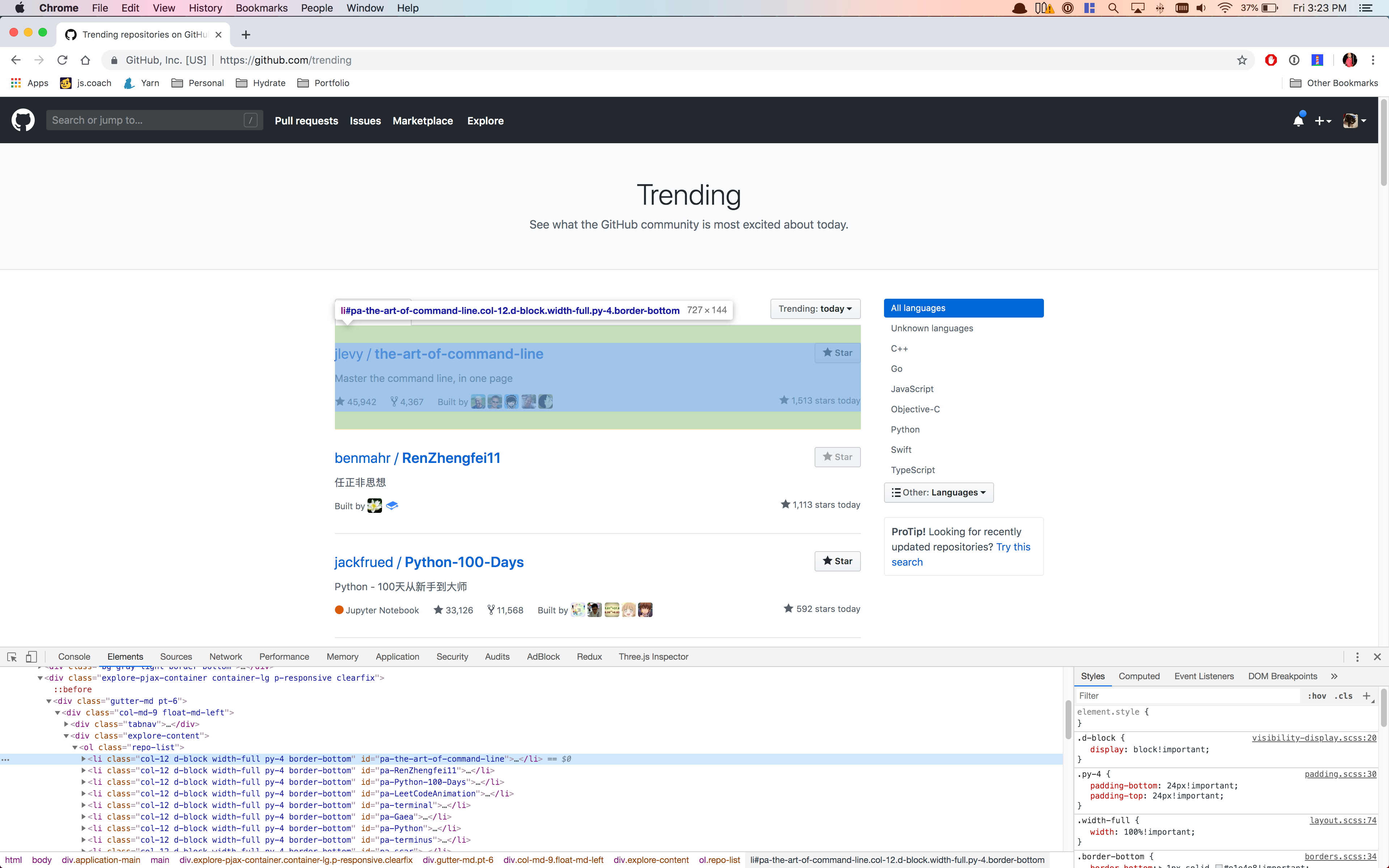

Let’s find a proxy

On the Internet, you can find many sites dedicated to providing free proxies. For example, you can use https://www.socks-proxy.net. Visit it and pick a proxy from Socks Proxy list.

In this tutorial, I use 184.178.172.13:15311.

By the time you read this article, this particular proxy will probably no longer work. In that case, pick another proxy from the site mentioned above.

Now, the working example looks like this:

Notice that I have added an onRejected callback. A proxy server might not work (especially a free one), so it is useful to show an error if our request has failed. Run the code and you will see the HTML code of the Google main page.

Updating the scraper

To refresh the memory here is the consumer code of the scraper from the previous article:

We create an event loop. Then we create an instance of Clue\React\Buzz\Browser. The scraper uses this instance to perform concurrent requests. We scrape two URLs with a 40-second timeout. As you can see, we don’t even need to touch the scraper’s code. All we need is to update the Browser constructor and provide a Connector configured for using a proxy server. First, create a proxy client with an empty connector:

Then we need a new connector for Browser with a configured tcp option, where we provide our client:

And the last step is to update the Browser constructor by providing the connector:

The updated proxy version looks like this:

But, as I have mentioned before, proxies might not work. It would be nice to know why we have scraped nothing. So, it looks like we still have to update the scraper’s code and add error handling. The part of the scraper which performs HTTP requests looks like this:

The request logic is located inside the scrape() method. We loop through the specified URLs and perform a concurrent request for each of them. Each request returns a promise. As an onFulfilled handler, we provide a closure where the response body is scraped. Then, we set a timer to cancel the promise, and thus the request, on timeout. One thing is missing here: there is no error handling for this promise. When the parsing is done, there is no way to figure out what errors have occurred. It would be nice to have a list of errors, with URLs as keys and the corresponding errors as values. So, let’s add a new $errors property and a getter for it:

Then we need to update method scrape() and add a rejection handler for the request promise:

When an error occurs, we store it inside the $errors property under the corresponding URL. Now we can keep track of all the errors during scraping. Also, before scraping, don’t forget to reset the $errors property to an empty array. Otherwise, we will keep storing old errors. Here is an updated version of the scrape() method:
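A sketch of the class with error tracking follows. It is an approximation rather than the article's original listing: the constructor signature, the getMovieData() getter and the regex-based extractFromHtml() stand-in are assumptions, and storing the exception message (rather than the exception object) is a choice made here for easy printing.

```php
<?php

require __DIR__ . '/vendor/autoload.php';

use Clue\React\Buzz\Browser;
use Psr\Http\Message\ResponseInterface;
use React\EventLoop\LoopInterface;

final class Scraper
{
    private $client;
    private $loop;
    private $scraped = [];
    private $errors = [];

    public function __construct(Browser $client, LoopInterface $loop)
    {
        $this->client = $client;
        $this->loop = $loop;
    }

    public function scrape(array $urls = [], $timeout = 5)
    {
        // Reset state so that results and errors of an earlier run are not kept.
        $this->scraped = [];
        $this->errors = [];

        foreach ($urls as $url) {
            $promise = $this->client->get($url)->then(
                function (ResponseInterface $response) {
                    $this->scraped[] = $this->extractFromHtml((string) $response->getBody());
                },
                // Rejection handler: remember what went wrong for this URL.
                function (Exception $exception) use ($url) {
                    $this->errors[$url] = $exception->getMessage();
                }
            );

            // Cancel the request if it takes longer than $timeout seconds.
            $this->loop->addTimer($timeout, function () use ($promise) {
                $promise->cancel();
            });
        }
    }

    public function getMovieData()
    {
        return $this->scraped;
    }

    public function getErrors()
    {
        return $this->errors;
    }

    // Stand-in extraction: only the <title> is read, since real selectors go stale.
    private function extractFromHtml($html)
    {
        preg_match('~<title>(.*?)</title>~is', $html, $matches);

        return ['title' => isset($matches[1]) ? trim($matches[1]) : null];
    }
}
```

After the loop has finished, `print_r($scraper->getErrors())` lists the failed URLs next to their error messages, which makes dead proxies and bans easy to spot.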


Now, the consumer code can be the following:

At the end of this snippet, we print both the scraped data and the errors. A list of errors can be very useful: besides tracking dead proxies, we can also detect whether we have been banned.

What if my proxy requires authentication?

All the examples above work fine for free proxies. But when you are serious about scraping, chances are high that you use private proxies. In most cases they require authentication. Providing your credentials is very simple: just add them to your proxy connection string, in the form user:password@host:port.

But keep in mind that if your credentials contain special characters, they should be URL-encoded:
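One way to build such a connection string is sketched below. The `proxyUri()` helper and the sample credentials are hypothetical; the encoding itself is done with PHP's built-in rawurlencode().

```php
<?php

// Hypothetical helper: builds "user:password@host:port" with URL-encoded credentials.
function proxyUri($host, $port, $user = null, $password = null)
{
    $address = $host . ':' . $port;

    if ($user === null) {
        return $address; // proxy without authentication
    }

    return rawurlencode($user) . ':' . rawurlencode($password) . '@' . $address;
}

// "@" and ":" inside the password would otherwise break the URI structure.
echo proxyUri('127.0.0.1', 1080, 'user', 'p@ss:word') . PHP_EOL;
// prints: user:p%40ss%3Aword@127.0.0.1:1080
```

The resulting string can be passed as the first argument when creating the proxy client.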

You can find examples from this article on GitHub.
